Technology

I guess one can say my fascination with technology began with my toys as a child. My parents were frustrated giving me toys and I would take them (rather tear them up) a part to see how they ticked. As a teenage, I developed interest in electronics constructing a simple tone generating circuit that I made into a monophonic musical keyboard. This was in the era before the small computer.

I guess one can say my fascination with technology began with my toys as a child. My parents were frustrated giving me toys and I would take them (rather tear them up) a part to see how they ticked. As a teenage, I developed interest in electronics constructing a simple tone generating circuit that I made into a monophonic musical keyboard. This was in the era before the small computer.

I enjoy the benefits that technology brings to our lives. More so, I enjoy understanding much of the design and how it is utilized as well as its limits. Here are some of the areas of technology that I have worked with both at home and in the enterprise space. I hope that you may find a bit of knowledge from my own experience in these articles.

1 - Open Systems

Per Wikipedia, “Open Systems are computer systems that provide some combination of interoperability, portability, and open software standards. (It can also refer to specific installations that are configured to allow unrestricted access by people and/or other computers; this article does not discuss that meaning).” On these pages, these are articles that relate to Open Systems and Data Storage, particularly encompassing UNIX and Linux.

Introduction

My hardware experience is predominantly on SPARC and Intel hardware platforms though I’ve had light experience with PA-RISC and HP’s Power chipset. My greatest accomplishment here was to provide a configuration framework that provided consistency in a shared filesystem that had the same look and feel across platforms but managed executable binaries per platform. The user profile also played into this framework in that platform oddities were compensated in the enterprise-wide shared profile but featured the ability for the end user to customize their environment.

My practical OS experience is on Solaris (1.4.x to 2.10) and Linux/Redhat (Enterprise 3 through 6 (professionally), Fedora Core through current (at the moment - Fedora 33) at home. On the Linux front, I’ve used at various times other distros such as Ubuntu, SuSE, Linux Mint, Linux Cinnamon.

I have recently worked with Raspberry Pi as a mini server to see how viable that platform is for the comparitively cheap price fulfilling low performing system needs.

1.1 - Architecture

When I was in junior high, all the students were given some sort of IQ test. The counselor told me that my results indicated that I was a 3 dimensional thinker. That certainly flattered me. To work with any type of architecture, requires 3 dimensional thinking. I enjoy most doing architectural work. To design various interelated components, both hardware and software, to work together to service a client community is akin to a conductor directing an orchestra. This section contains articles on various subjects related to computing architecture.

1.1.1 - Primal Philosophy

Foundational thoughts on creating a computing architecture in a medium to large enterprise environment.

Introduction

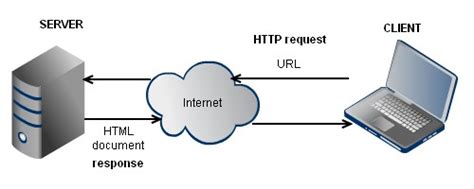

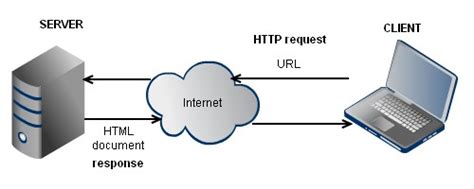

Back in the glory days of Sun Microsystem, they were visionary at that time when the Ethernet and TCP/IP was emerging as the universal network topology and protocol standard. Sun adopted the marketing slogan "the network is the computer" and wisely so. That is the way I have viewed computing from the time I started architecting networks of computers. It isn't about a standalone machine performing a specific localized task only, rather it is a cooperative service that ultimately satisfies a human need as it relates to a service and its related data.

Back in the glory days of Sun Microsystem, they were visionary at that time when the Ethernet and TCP/IP was emerging as the universal network topology and protocol standard. Sun adopted the marketing slogan "the network is the computer" and wisely so. That is the way I have viewed computing from the time I started architecting networks of computers. It isn't about a standalone machine performing a specific localized task only, rather it is a cooperative service that ultimately satisfies a human need as it relates to a service and its related data.

Through the years, I have been successful in tailoring an architecture that required few administrators to efficiently administrate hundreds of computers running on multiple hardware and OS platforms serving both high-end technical, business end user communities, desktop and server incorporating multiple platforms. I have found three areas that require a standard for administration (1) OS configuration (2) separate off data onto devices that are built for that purpose of managing data (i.e. NAS), developing a taxonomy that support its usage and (3) network topology.

OS and Software Management

Ninety five percent of the OS installation should be normalized into a standard set of configuration files that can be incorporated into a provisioning system such as Red Hat Satellite for Linux. Separating off the data onto specific data appliances frees up backups being performed on fewer machines and do not require kernel tweaks that satisfies both the application service running on the server versus handling backups. Considering that application software doesn't rely on a local registery as does MS Windows, the application software itself can be delivered to multiple hosts from a network share, thus making any given host more fault tolerant.

Ninety five percent of the OS installation should be normalized into a standard set of configuration files that can be incorporated into a provisioning system such as Red Hat Satellite for Linux. Separating off the data onto specific data appliances frees up backups being performed on fewer machines and do not require kernel tweaks that satisfies both the application service running on the server versus handling backups. Considering that application software doesn't rely on a local registery as does MS Windows, the application software itself can be delivered to multiple hosts from a network share, thus making any given host more fault tolerant.

The argument for “installation per host” is that if there is an issue with the installation on the network share, all hosts suffer. This is a bit of a fallacy. While it is true that if there is an issue, it breaks everywhere, but then again, you fix an issue in one place, you fix it everywhere. The ability to extend the enterprise-wide installation with minimal effort, maximizing your ability to administrate it outweighs the negative for breaking it everywhere. It takes discipline to methodically maintain a centralized software installation.

Data Management

Data should be stored on NAS (network attached storage) appliances as they are suited toward optimal data delivery across a network and gives a central source for managing it. Anymore, most data is delivered across a network. NAS appliances are commonly used (such as Netapp) to deliver a "data share" using SMB, NFS or SAN over FCoE protocols.

Data should be stored on NAS (network attached storage) appliances as they are suited toward optimal data delivery across a network and gives a central source for managing it. Anymore, most data is delivered across a network. NAS appliances are commonly used (such as Netapp) to deliver a "data share" using SMB, NFS or SAN over FCoE protocols.

In the 1990s, the argument against using an ethernet network for delivering data was due to bandwidth and fear for what would happen if the network goes down. Even back then, if you lost the network, the network was held together by backend services for identity management and DNS. In the 21st century, I always chose to install two separate network cards (at least 2 ports each) in each server. I configured at least one port per card together for a trunked pair. One pair would service a data network and the other for frontend/user access. This worked well over the years. Virtualizing/trunking multiple network cards provide a fault tolerant interface whether for user or data access, though I have never seen a network card go bad.

There are a handful of application software that requires SAN storage, though I would avoid SAN unless absolutely required. You are limited by the filesystem laid on the SAN volume and probably have to offload management of the data from the appliance serving the volume. Netapp has a good article on SAN vs. NAS.

Business Continuance/Disaster Recovery and Virtualization

There is the subject of business continuance and disaster recovery that plays into this equation. Network virtualization is a term that includes network switches, network adapters, load balancers, virtual LAN, virtual machines and remote access solutions. Virtualization is key toward providing support for fault tolerance inside a given data center as well as key toward providing effective disaster recovery. Virtualization across data centers simplify recovery when a single data center fails. All this requires planning, providing replication of data and procedures (automated or not) to swing a service across data centers. Cloud services provide a fault tolerant service delivery as a base offering.

There is the subject of business continuance and disaster recovery that plays into this equation. Network virtualization is a term that includes network switches, network adapters, load balancers, virtual LAN, virtual machines and remote access solutions. Virtualization is key toward providing support for fault tolerance inside a given data center as well as key toward providing effective disaster recovery. Virtualization across data centers simplify recovery when a single data center fails. All this requires planning, providing replication of data and procedures (automated or not) to swing a service across data centers. Cloud services provide a fault tolerant service delivery as a base offering.

The use of virtual machines is common place these days. I’ve been amused in the past at the administrative practice by Windows administrators who would deploy really small servers that only provided a single service. When they discovered virtualization, they adhered to the same paradigm, but providing a single service per virtual machine. Working with “the big iron”, services would be served off of a single server instance where those services required roughly the same tuning requirements and utilization and performance was monitored. With good configuration management, extending capacity was fairly simple.

Work has been done to virtualize the network topology so that you can deploy hosts on the same network world-wide. For me, this is nirvana for supporting a disaster recovery plan, since a service or virtual host can be moved across to another host, no matter the physical network the hypervisor attached without having to reconfigure its network configuration and host naming service entry.

Virtual networks (e.g. Cisco Easy Virtual Network - a level 3 virtualization) provides the abstraction layer where network segmentation can go wide, meaning span multiple physical networks providing larger network segments across data centers. Having a “super network”, disaster recovery becomes much simpler as the IP address doesn’t have to be reconfigured and reported to related services such as DNS is needed.

Cloud Computing

My last job as a systems architect, I had the vision for creating a private cloud, with the goal for moving most hypervisors and virtual machines into a private cloud. Whether administering a private or public cloud, one needs a toolset for managing a "cloud". The term "cloud" was a favorite buzzword 10 years ago that was not a definitive term. For IT management it usually meant something like "I have a problem that would be easier to shove into the cloud and thus solve the problem". (Sounded like the out sourcing initiatives in the 1990s). Any problem that exists doesn't go away. If anything the ability to manage a network just became more complicated.

My last job as a systems architect, I had the vision for creating a private cloud, with the goal for moving most hypervisors and virtual machines into a private cloud. Whether administering a private or public cloud, one needs a toolset for managing a "cloud". The term "cloud" was a favorite buzzword 10 years ago that was not a definitive term. For IT management it usually meant something like "I have a problem that would be easier to shove into the cloud and thus solve the problem". (Sounded like the out sourcing initiatives in the 1990s). Any problem that exists doesn't go away. If anything the ability to manage a network just became more complicated.

There has been various proprietary software solutions that allows the administrator to address part of what is involved with managing a cloud whether for standing up a virtual host or possibly carving out data space or configure the network. OpenStack looks to be hardware and OS agnostic for managing a private and public cloud environment. I have no experience here, but looks to be a solution that the hardware manufacturers and OS developers have built plugins as well as integration with the major public cloud providers.

Having experience working in a IaaS/SaaS solution, utilizing a public cloud is only effective with small data. Before initiating a public cloud contract, work out an exit plan. If you have a large amount of data to move, you likely will not be able to push it across the wire. There needs to be a plan in place, possibly a contractural term to being able to physically retrieve the data. Most companies are anxious to enter into a cloud arrangement but have not planned for when they wish to exit.

Enterprise-Wide Management

There is the old adage that two things are certain in life - death and taxes. Where humans have made something, whether physical or abstract, one thing is certain - it is not perfect and will likely fail at some time in the future. Network monitoring is required so the administrators know when a system has failed. Stages for implementation should include server up/down monitoring followed by work with adopting algorythms for detecting when a service is no longer available. From there, performance metrics can then be collected and work to aggregate those metrics and threshholds into a form that provides support for capacity planning and measure whether critical success factors are met or not.

There is the old adage that two things are certain in life - death and taxes. Where humans have made something, whether physical or abstract, one thing is certain - it is not perfect and will likely fail at some time in the future. Network monitoring is required so the administrators know when a system has failed. Stages for implementation should include server up/down monitoring followed by work with adopting algorythms for detecting when a service is no longer available. From there, performance metrics can then be collected and work to aggregate those metrics and threshholds into a form that provides support for capacity planning and measure whether critical success factors are met or not.

Another thought toward capacity management. Depending on the criticality of the service offering, the environment should provide for test/dev versus production environments. Some services under continual (e.g. waterfall) development could require separating out test and dev environments in order to stage for a production push.

Provisioning tools are needed to perform quick, consistent installations whether loading an OS or enabling a software service. At a minimum, shell scripts are needed to perform the low-level configuration. At a higher level, software frameworks like OpenStack and Red Hat Satellite are needed to manage a server farm for more than a handful of servers.

Remote Access

Remote access has been around in various forms for the past 20+ years and is becoming a critical function today. VPN (virtual private network) is the term associated with providing secure packet transmission over the extranet. While a secure transport is needed, outside of public cloud services, there is the need for an edge service that provides the corporate user environment "as if" they were inside the office.

Remote access has been around in various forms for the past 20+ years and is becoming a critical function today. VPN (virtual private network) is the term associated with providing secure packet transmission over the extranet. While a secure transport is needed, outside of public cloud services, there is the need for an edge service that provides the corporate user environment "as if" they were inside the office.

Having worked in a company that had high-end graphical workstations used by technical users requiring graphics virtualization and high data performance, we worked with a couple solutions that delivered a remote desktop. NoMachine worked well but we migrated toward Nice Software (now an Amazon Web Service company). At the time we were looking at not only providing a remote access solution, but also a replacement for the expensive desktop workstation while providing larger pipes in the data center to the data farm. Nice was advantageous for the end user in that would start an interactive process on the graphics server farm as a remote application from their desk, suspend the session while their process ran, and remotely connect again to check on that process from home.

Summary

When correctly architected, you create a network of computers that are consistently deployed and easily recreated should the need arise. More importantly, in managing multiple administrators, where a defined architecture exists and understood and supported by all, the efficiency gained allows the admin to work beyond the daily issues due to inconsistent deployment, promotes positive team dynamics and minimizes tribal knowledge.

1.1.2 - Network Based Administration

This section provides thoughts over the basics in designing a network based computing environment that require the fewest number of administrators to manage it.

Configuration Management

Configuration design and definition is at the core of good network architecture. I have experimented with what configuration elements are important, which should be shared and which should be maintained locally on each host. Whether an instance is virtual, physical or a container, these concepts apply universally.

Traditionally, there was a lot of apprehension to share both application and configuration over a network much less share application accessible data. I guess this comes from either people who cannot think 3 dimensionally or those whose background is solely administrating a Windows network of which the design has morphed from a limited stand-alone host architecture. Realistically today, if there was no network, we’d not be able to do much anyway. Developing a sustainable architecture surrounding the UNIX/Linux network is efficient and manageable. Managing security is a separate topic for discussion.

The first step in managing a network of open system computers is to establish a federated name service with the purpose of managing user accounts and groups as well as provide a common reference repository for other information. I have leveraged NIS, NIS+ and LDAP as name service through the years. I favor LDAP since the directory server provides a better system for redundancy and service delivery, particularly on a global network. MS Windows Active Directory can be made to work on UNIX/Linux hosts by enabling SSL, make some security rule changes and adding the schema supporting open systems. The downside to Active Directory compared to a Netscape based directory service is managing the schema. On Active Directory, once the schema has been extended, you cannot rescind the schema unless you rebuild the entire installation from scratch. To date, I have yet to find another standardized directory service that will accomodate the deviations that Active Directory provide an MS network.

In a shop where there are multiple flavors of open systems, there have been ways that I have leveraged automounter to store binaries that are shared on a given OS platform/version. Leveraging on NAS storage such as Netapp, replication can be performed across administrative centers that can be used universally and maintained from one host. For the 5 hosts I maintain at home, I have found TruNAS Core (formerly FreeNAS) to be a good opensource solution to deliver shared data to my Linux and OSX hosts.

Common Enterprise-Wide File System Taxonomy

The most cumbersome activity toward setting up a holistic network is deciding on what utilities and software is to be shared across the network from a single source. Depending on the flavor, the path to the binary will be different. Further, the version won’t be consistent between OS versions or platform. Having a common share to provide for scripting languages such as Perl or Python help to provide a single path to reference in scripting, including plugin inclusion. It requires some knowledge on how to compile and install opensource software. More architectural discussion is included in the article User Profile and Environment over how to manage the same look and feel though different over the network.

Along with managing application software across a network, logically the user home directory has to be shared from a NAS. Since the user profile is stored in the home directory, it has to be standardized generically to function on all platforms and possibly versions. Decisions are needed for ordering the PATH and whether structure is needed in the profile to extend functionality to provide for user customizations or local versus global network environments. At a minimum, the stock user profile must be unified so that it can be managed consistently over all the user community, possibly with the exception of application software related administration accounts that are specific to the installation of a single application.

Document, Document, Document!

Lastly, it is important to document architecture and set standards for maintaining a holistic network as well as provide a guide to all administrators that will provide consistency in practice.

These links below provide more detail toward what I have proven in architecting and deployment of a consistent network of open systems.

1.1.2.1 - Federated Name Services - LDAP

Federated name services have evolved through the years. Currently LDAP is the current protocol driven service that has replaced legacy services such as NIS and NIS+. There are many guides over what is LDAP and how to implement LDAP directory services. This article discusses about how to leverage LDAP for access control in a network of open system hosts with multiple user and admin groups in the enterprise.

Introduction

What is a Federated Name Service? In a nutshell, it is a service that is organized by types of reference information such as in a library. There are books in the library of all different types and categories. You select the book off the shelf that best suits your needs and read the information. The “books” in an LDAP directory are small bits of data that is stored in a database on the backend and presented in a categorical/hierarchical form. This data is generally written once and read many times. This article is relative to open systems, I will write another article over managing LDAP services on Microsoft’s Active Directory that can also service open systems.

Design Considerations

Areas for design beyond account and user groups, are for common maps such as host registration supporting Kerberos or for registering MAC addresses that can be used as a reference point for imaging a host and setting the hostname. Another common use is for a central definition of automount maps. Depending on how one prefers to manage the directory tree, organizing separate trees that support administration centers that house shared data made the most sense to me with all accounts and groups stored in a separate, non-location based tree.

A challenge with open systems and LDAP is how to manage who can log in where. For instance, you don’t want end users to log into a server when they only need to consume a port based service delivered externally to that host. Possibly on a server, you may need to see all the users of that community but not allow them to login. This form of “security” can be managed simply by configuring both the LDAP client and the LDAP directory to match on a defined object’s value.

To provide an example, let’s suppose our community of users comprised of Joe, Bob, Mary, and Ellen. Joe is the site administrator and should have universal access to all hosts. Bob is member of the marketing team where Mary is an application administrator for the marketing team and Ellen is a member of the accounting team.

On the LDAP directory, you’ll need to use an existing object class/attribute or define a new one that will be used to give identity to the “access and identity rights”. If you are leveraging off of an existing defined attribute, that attribute has to be defined as a multi-value attribute since one person may need to be given multiple access identities. For the sake of this discussion, let’s say we add a custom class and the attribute “teamIdentity” to handle access and identity matching that is also added to the user objects (user objects can be configured to include multiple object classes as long as they have a common attribute such as cn).

On the client side, you will be creating a configuration to bind and determine which name service maps will be used out of the directory service. As a part of the client configuration, you can create an LDAP filter that is used when client queries the directory service to only return information that passes the filter criteria. So included in mapping what directory server and what the basedn and leaf for making a concise query into the directory should be, you append a filter that designates further filters a single or multiple matches on an attribute/value pair. For user configuration, there are two configuration types to define: user and shadow databases. The “user” is used to configure for “who should be visible as a user” on the host. The “shadow” is used to configure for who can actually login directly to the host. When the NSS service operates locally on the host, its local database cache will contain the match of users according to the common data values matched between the directory server and the client configuration and filter object/attribute/values. The challenge here is more on functional design around what values are created and for what purpose do they serve. Another custom class may be wise to put definition and ultimately control around what attribute values can be added to the user object. Unless you create definition and rules in your provisioning process, any value (intended, typo, etc.) can be entered.

To bring together this example, let’s suppose that this is the directory definition and content around our user community:

| User/Object |

teamIdentity/Value |

| Joe |

admin |

| Bob |

marketing-user |

| Mary |

marketing-sme |

| Ellen |

accounting-user |

| John |

marketing |

Let’s say we have these servers configured as such:

| Server (LDAP Client) |

Purpose |

Client Filter - Passwd |

Client Filter - Shadow |

| host01 |

Network Monitoring |

objectclass=posixAccount |

objectclass=posixAccount,objectclass=mycustom,teamIdentity=admin |

| host02 |

Marketing Services |

objectclass=posixAccount,objectclass=mycustom,teamIdentity=admin,teamIdentity=marketing-user,teamIdentity=marketing-sme |

objectclass=posixAccount,objectclass=mycustom,teamIdentity=admin,teamIdentity=marketing-sme |

| host03 |

Accounting Services |

objectclass=posixAccount,objectclass=mycustom,teamIdentity=admin,teamIdentity=accounting-user |

objectclass=posixAccount,objectclass=mycustom,teamIdentity=admin,teamIdentity=accounting-sme |

Here is how each user defined in the directory server will be handled on the client host:

| Server (LDAP Client) |

Identifiable as a User |

Able to Login |

| host01 |

Everyone |

Joe only |

| host02 |

Joe, Bob, Mary |

Joe, Mary |

| host03 |

Joe, Ellen |

Joe |

Notice that for host03, there was a “teamIdentity=accounting-sme” defined as part of the filter. Since Ellen exists in the directory service with the attribute “accounting-user” assigned, she will be visible as a user, but not able to login. Conversely, if there was a user in the directory service configured for the “teamIdentity=accounting-sme”, they would not be able to log in since you have to be identifiable as a user before you can authenticate. One last observation, John is configured for “teamIdentity=marketing”. Since that value is not configured in the client filter, John will not be identifiable or able to login to host02.

For more information over the LDIF syntax see Oracle documentation. For more information over client configuration, you’ll have to dig that out of the administration documentation for your particular platform/distro.

1.1.2.2 - User Profile and Environment

This article discusses considerations in designing and configuring a user profile supporting the OS and application environments. There are different aspects to consider in tailoring the user environment to operate holistically in an open systems network. One major architectural difference between open systems and MS Windows is that applications, for the most part are dependent on a local registry database that a packaged application plants its configuration. Historically with traditional UNIX environments, there is only a text file that contains it’s configuration information, whether a set of key/value pairs or a simple shell file assigning values to variables.

Overview

More modern versions of UNIX, including Linux has implemented a packaging system in order to inventory locally installed OS and software components. These systems only store meta data as opposed to being a repository for configuration data that provides structure for the inventory of software installed and related dependencies. Overall, there is no “registry” per se as in a Windows environment where the local registry is required. Execution is solely dependent on a binary being executed through the shell environment. The binary can be stored locally or on a network share and effectively be executable. The argument against this execution architecture is over control and security for applications running off a given host since realistically a user can store an executable in their own home directory and execute it from that personal location. This can be controlled to a certain extent though not completely by restricting access to compilers, the filesystem and means to external storage devices.

Consideration for architecting the overall operating environment can be categorized in these areas:

- Application or service running on the local host and stored somewhere

- OS or variants due to the OS layout and installation

- Aspects directly related to work teams

- Aspects related to the personal user computing experience.

Each of these areas need to be a part of an overall design for managing the operating environment and user experience working in the operating environment.

Application Environment

The most easily is managing the application environment. The scope is fairly narrow and particular to a single application to execute on a single host. Since there is no common standard around setting the process environment and launching an application, there is a need for the application administrator to establish a standard for managing how the environment is set and launched - i.e. provide a wrapper script around each application, executed from a common script directory. With purchased applications, they may or may not provide context around their own wrapper. Having a common execution point makes it easier to administrate, particularly when there is software integrated with others. I’ve seen some creative techniques where a single wrapper script is used that sources its environment based on an input parameter on the wrapper script. These generally, though logical, become complicated since there are as many variations for handling the launch of an application as there are application developers.

All OS’s have a central shell profile ingrained into the OS itself depending on the shell. I have found that it is best to leave these alone. Any variations that is particular to the OS environment due to non-OS installation on the local host needs to be managed separately and that aspect is factored into the overall user or application execution environment. Another kink with managing a network of varying OS variants is providing a single profile that compensates for the differences between OSs. For example a common command might be located in /usr/bin on one OS variant but exist in /opt/sfw/bin on another. Based on the OS variant, the execution path would need to factor in those aspects that are unique to that variant.

Work teams may have a common set of environment elements that are particular only to their group but should be universal to all members of that team. This is another aspect to factor into the overall profile management.

User Profile and Environment

Finally, the individual user has certain preferences such as aliases they desire to define and use that apply only to themselves. From a user provisioning standpoint, a template is used to create the user oriented profile. The difficulty is in the administration of a network of users who all wind up with their own version of the template first provisioned into their home directory. This complicates desktop support as profiles are corrupted or become stale with the passage of time. I have found it wise to provide a policy surrounding maintaining a pristine copy of the templated profile in the user’s home directory but provide a user exit to source a private profile where they can supplement the execution path or set aliases. A scheduled job can be run to enforce compliance here but only after the policy is adopted and formalized with the user community.

Architecture and Implementation

The best overall architecture that I have wound up with is a layered approach with a set priority that provides for more granular the precedence based on how far down the priority stack of execution. In essence, the lower down the chain, the greater influence that layer has on the final environment going from the macro to the micro. Here are some diagrams to illustrate this approach.

Logical Architecture

Execution Order

The profile is first established by the OS defined profile whose file that is sourced is compiled into the shell binary itself. The location of this file varies according to the OS variant and how the shell is configured for compilation. The default user-centric profile is located in the home directory using the same hidden file name across all OS variants. It is the user profile file that is the center for constructing and executing the precedence stack. With each layer, the last profile pragmatically will override the prior layer as indicated in the “Logical” diagram. Generally there is little need for the “Local Host Profile”. It is optional and only needed when on a standardized location on the local host a profile is created (e.g. /usr/local/etc/profile).

See the next article “Homogenized Utility Sets” for more information surrounding the “Global” and “Local” network file locations and their purpose. This will give perspective around these shared filesystems.

1.1.2.3 - Homogenized Utility Sets

An article that talks about utilities that can be shared between all open system variants, the difficulties to watch out for and elements to consider in the design. Ultimately a shared file system layout is needed that presents a single look and feel across multiple platforms but leverages off of a name service and the use of “tokens” embeded in the automount maps to mount platform specific binaries according to the local platform. This article is complimentary to the previous article “User Profile and Environment”. Topics include: Which Shell?, Utilities and Managing Open Source.

Which Shell?

In short, CSH (aka C Shell) and its variants aren’t worth messing with unless absolutely coerced. I have found explainable and repeatable bugs that make no sense with CSH. There is quite a choice for Bourne shell variants. I look for the lowest common equivalent between the OS variants.

KSH (aka Korn Shell) is a likely candidate since it has extended functionality beyond the Bourne Shell, but is difficult to implement since there are several versions across platforms. Those extended features make it difficult to code one shell script to be used across all platforms.

I have found that Bash is the most widely supported at the same major version that can be compatibly used out-of-box across the network. The last thing I would care to do is re-invent the wheel of a basic foundational component of the OS. It is suitable for the default user shell as well as a rich enough function set for shell scripting.

Utilities

Working with more than one OS variant will present issues for providing consistent utilities such as Perl, Python, Sudo, etc. since these essential tools are at various obsolete versions out of box. As well as managing a consistent set of plugin modules can be difficult to maintain (e.g. Perl and Python), especially when loaded on each individual host in the network. I have found it prudent to download the source for these utility software along with desirable modules that provide extended utility and compile them into a shared file system per platform type and version. The rule of thumb here is if all your OS variants sufficiently support an out-of-box version, then use the default; if not, compile it and manage it to provide consistency in your holistic network.

Managing Open Source Code

Granted binary compatibility doesn’t cross OS platform and sometimes does not cross OS version, I have found it is easier to compile and manage my homogeneous utility set on each OS variant and share it transparently across the network leveraging off of the automounter service. First, let’s look at a structure that will support a network share for your homogeneous utility set.

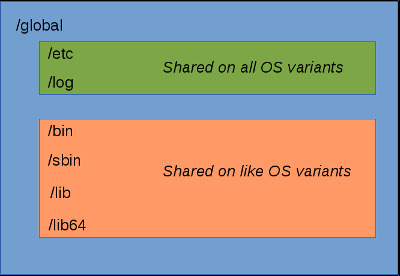

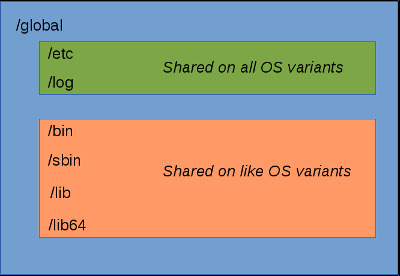

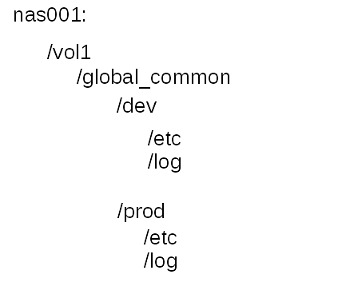

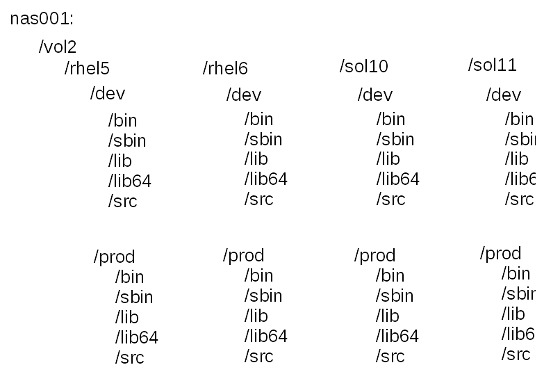

There are binary, configuration and log data on a filesystem to be shared. Below is a diagram for implementing a logical filesystem supporting your homogeneous utility set.

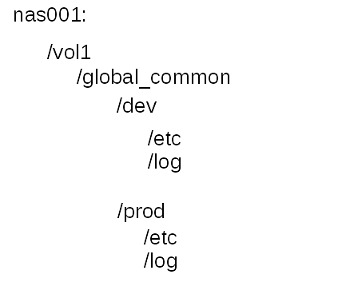

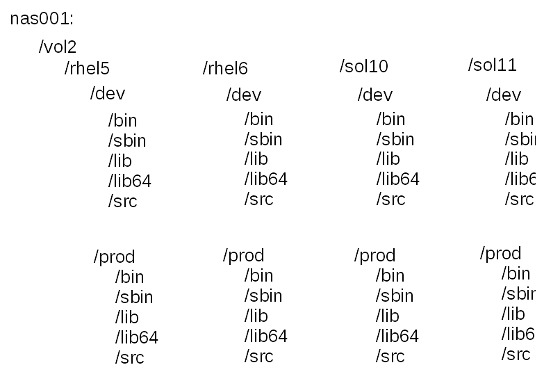

I create the automount map supporting this directory structure with embedded tokens on the “Shared on like OS variant” subdirectories that give identity to the OS variant. The size is fairly small. I simplify by storing all these mounts on the same volume. By doing this, you can replicate between sites, which will yield a consistent deployment as well as provide for your disaster recovery plan. I also provide for a pre-production mount as well. The “Shared on all OS variants” exist on a shared filesystem that is replicated for disaster recovery, but not used at other sites. Below is a sample for structuring the filesystem share.

Shared on All Hosts

Shared on All Like OS Variants

Here is a sample indirect automap defining the key value pairs supporting mount point /global that is stored in the “auto.global” map.

| Key |

Value |

etc |

nas001:/vol1/global_common/$ENVN/etc |

log |

nas001:/vol1/global_common/$ENVN/log |

bin |

nas001:/vol2/$OSV/$ENVN/bin |

sbin |

nas001:/vol2/$OSV/$ENVN/sbin |

lib |

nas001:/vol2/$OSV/$ENVN/lib |

lib64 |

nas001:/vol2/$OSV/$ENVN/lib64 |

Embedded tokens are resolved through the client automounter configuration. For Linux this is done either in the /etc/auto.master file or in the /etc/sysconfig/autofs (RedHat). This is a sample for configuring in the /etc/auto.master configuration file.

/global -DOSV=rhel5,-DENVN=prod auto.global

This is what would be added to /etc/sysconfig/autofs configuration file. Note that this affects all entries where /etc/auto.master affects only the single map referenced.

2 - Software Languages

I developed a passion for software development in college. Transitioning from being an application developer into an OS administrator, I particularly enjoyed using simple programming tools to perform repetitive functions or easily simplify what would alternatively be a series of complex operations into something that can be reduced into something simple. This section contains articles over some of the scripting/interpreted languages I have used.

2.1 - Perl - The Swiss Army Knife for the Administrator

Discussion and techniques used in working with Perl as an administrator.

Introduction

Eventually, the administrator will need to take a pile of text oriented data and “dice and mince” it in order to evaluate it or format it into a simple report. Shell scripting is a bit crude, but handles dicing up single lines of output from some command and culling out information in order to use it in some other form.

Perl is an excellent “Swiss Army Knife” of the script oriented languages. It shines when you have the need for decomposing unstructured data in order to make sense of it and report on it. This is my first tool to go to for collapsing a collection of related data within groups of collections (e.g. parsing through LDIF or XML formatted data).

Perl is an evolving language though very mature that is still well supported. Object orientation is rather crude and a backport somewhere in version 4 or 5 though a foundational design feature in version 6. “Moose” is a package that was developed to simplify the usage of the klunky perl object orientation though version 6 (aka “Raku”) has been release and will likely deprecate “Moose”.

Perl is modular, it is highly and easily extendible. There is a very rich ecosystem at CPAN.org that actively supports packages that extend the base functional capability of Perl. The downside when comparing Perl with other robust scripting languages, is how it lags behind in the packages incorporated into the base installation that support modern needs.

My Experience

I’ve worked with Perl version 5 for a lot of years for performing data mincing operations. Most significantly I’ve interfaced with LDAP to extract directory information or used it to traverse a file system tree to perform a listing that summerize how much data is stored at each descending level with an overall summary at the top level.

I experimented with using the multi-threaded capability but found it didn’t yield much of a time saver when compared to a single thread run over the same data. It was likely a contention issue on the CPU, though it was thread ready.

Here are some other articles on Perl subjects:

2.1.1 - Evil Multidimensional Arrays

Traditionally, a developer has been conditioned to create temporary databases or temporary working files outside of program code to leverage “database oriented” operations. In Perl, the technique for leveraging arrays defined and stored inside another array is scary for the novice developer.

Introduction

It’s hard to wrap your head around having any type of array as an element inside another array. Using this technique is not impossible but requires some practice to master its use. After a few times of performing some simple applications of the technique such as multiple hashes stored in a list array whose elements are unique persons, it becomes a brain-dead technique to incorporate into your code and becomes an essential in leveraging the utility for sorting/traversing arrays. I will use this technique when the amount of data is light and relatively small and output is generally for utility reporting. Multidimensional arrays does consume memory, however memory is fairly abundant these days and can handle fairly substantive arrays.

Devil in the Details

Foundationally as a review, there are 3 types of variables:

- Scalar - A place in memory that stores either a literal or a reference to another place in memory (i.e. another variable).

- Array - Also known as a “list array”. An indexed referenced variable that holds one or more scalars. References are numeric and start with element “0”.

- Hash - A key/value array whose scalar value is referenced by an associated key value. This array type is unstructured though there is the utility “sort” operation for referencing the key values in alphanumeric order (of which itself is a list array of references that is returned for parsing).

At the outset, the basic approach I use in developing Perl code is to choose one data source that will seed a key, loading into a top level array. I then parse through other data sources and appropriately build off of the initial array structure, appending more arrays as appropriate. The type of array that I construct is dependent on how I need to parse it. With this technique, it helps me to break down the “data model” into consumable pieces and allows me to focus in on a more detail level without losing perspective of the whole “virtual data” landscape.

I am big on sufficient inline documentation in the code without regurgitating the code. This is especially important with multidimensional arrays once you incorporate more than 2 array levels, I find that it is important to insert in comments to document the array structures. This has saved me time in the long term when I have to come back and maintain the code, not to mention avoid horrors for someone else maintaining your code while describing you with a continuous stream of four letter words.

Practical examples where I have incorporated array in arrays include a simple case for storing key/values out of an LDIF with where each distinguished name (dn) is stored as an element in a top level list array. Where there are non-unique object keys in the LDIF (e.g. group members in a posixgroup object class), those hash values become a list array stored as the value in a hash element.

The most complicated example was where I needed to audit the “sudo” rights a user has. I accomplished this by parsing the sudoers file and associating the user, host, group and command alias sets together referentially with utility subfunctions that would dump detail out of the related array structure for an input reference. This involved loading individual array sets according to the alias type. Reporting then became modal for associating the rulesets together logically to report by user and what hosts and what commands they can run or by host and what users were authorized to run sudo and for what commands they were authorized. There were some limitations and assumptions here (e.g. how sudo handles group based rules) that the reporting could not accommodate, but this provided an 80% solution where there was no solution.

Here are a few “how-to’s” that gives detail instruction and examples for multidimensional arrays:

2.2 - Python

Python has become popular for rapid application development due to its readability and ease to maintain the codebase. It is modular and object oriented. Like Perl, you can extend core functionality with a host of add-on modules to make calls to a database, graphic interface library or interface with back-end web applications. Python, strangely, can be used for developing a small application or a large application such as AI. Another advantage is the ease for deployment across all platforms, including MS Windows.

Overview

Having programming experience that spans 20+ years, I was watching the popularity rise for Python. Already being proficient in other versatile script oriented languages that also includes some archaic UI library integrations, why should I bother with placing Python in my arsenal? I could see that those whose experience base in the C oriented languages could to rapidly learn a C-like language such as Python without requiring compilation before execution. I dug in to get a feel for the language and discern how it differentiates with the other languages I already learned.

[more articles comming in this subject area.]

2.3 - Shell Scripting

Here are a few articles over shell scripting. Shell scripting is very basic and is the basis for executing one or a sequence of commands on an operating system.

Overview

The “Shell” is the easiest of “languages” (if one could call it that) to learn. As a staple in adminstering an OS, it is a requirement for the administrator to be able to read and code shell scripts as it is the utility most used for OS installation and configuration as well as for application installation. There are several shells available on any operating system. The shell is a basic command that is executed when a user logs into a system. The shell is the mechanism that provides an “environment” that other commands or applications are executed during a user session.

Here are some articles that discuss the two basic shell types:

2.3.1 - Bourne Shell Scripting

There are variants of the Bourne Shell. The Bourne Shell (/bin/sh) was developed by Stephen Bourne at Bell Labs. It was released in 1979 with the release of version 7 of UNIX. Although it is used as an interactive command interpreter, meaning that the program is the principal program running when they login for an interactive session. It was also intended as a scripting language that would execute like a canned program using a framework of logic that executes other programs.

Introduction

There are several Bourne Shell variants available on open systems to perform rudimentary shell scripting. The traditional Bourne Shell (“sh”) has been fading in the sunset though it is lightweight for use by the OS. I have found that for the user environment, Bash (Bourne Again Shell) is the logical choice that is packaged on all open system platforms and consistently named (/usr/bin/bash). From a scripting standpoint, though I prefer Korn, Bash is universal enough and has a similar notion of the advanced Korn features, though not as robust (e.g. smart variable substitution options/functionality).

The Korn Shell was originally developed by David Korn, until about 2000, the source code was proprietary code licensed by AT&T. In about 2000, the code was released to open source. There are two significant versions released - KSH 1988 and KSH 1993. On Linux, an open source version pdksh is roughly the 1993 version. On proprietary UNIX systems, both the traditional Korn 1988 and 1993 are supplied, though not consistently named across OS variants as an executable.

What is the Purpose of the Shell?

The shell concept was originally developed as a way for an end user to start the execution of a program and run on top of the operating system. It was also a simple way to provide a simplistic code base to perform a stacked execution of commands to produce a desired end result. If you only need simple logic branching and don’t require computational math a shell script is suitable. A common mistake that scripters often do is parsing multiple lines out of an input source that have to be considered as one (e.g. parsing through an LDIF). Another tool should be used such as Perl or Python for advanced scripting.

Arrays are basic. There is only one type available - the indexed array. To replicate a key/value oriented array, you have to use two arrays that are loaded simultaneously. In parsing the array, you have to use script logic to parse the “key” array and use that index on the “value” array. There is no ability to perform fuzzy matches. You have to condition on the whole string for a match.

One last point to make is over environmental variable scope. Variables are all in the “global scope” unlike other scripting languages. That means that the contents of a named variable is universally available throughout the script using the same name. Other languages handle named variables locally within the scope of a function unless it is declared to be global. Where one function is subordinate to another function, it’s variables are inherited from its parent.

Here are a couple good tutorial and reference guides:

2.3.2 - C Shell

C Shell is an artifact that seems to never go away. This article is a technical rant. I would not recommend using it.

Introduction

C Shell was created by Bill Joy while he was a graduate student at University of California, Berkeley in the late 1970s. It has been widely distributed, beginning with the 2BSD release of the Berkeley Software Distribution (BSD) which Joy first distributed in 1978. It has been used as an interactive shell, meaning that it provides an interface for the user to execute other programs interactively. It also was intended to be used to execute as a pre-determined set of commands executed as a single program. There has been a cult following for /bin/csh usage, mainly due to the fact those people generally don’t know any other scripting language.

I’ve had to script with C Shell. From experience, I’ve found it to be quirky, with reproducible errors that are not true and working around them by embedding a comment just to get the code to be intrepeted correctly. The shell hasn’t been updated since the 1990s though the open source community has produced a parallel version that arguably has been modernized. When working in a multi-platform environment, the open source C Shell variants do not exist on proprietary UNIX systems. I avoid using C Shell altogether though some application developers still hold onto the shell as the wrapper around their compiled binaries.

Here is an article that was published by Bruce Barnett several years ago. The points that he and other contributors have made are still valid.

Top Ten Reasons Not to Use the C Shell

Written by Bruce Barnett

With MAJOR help from

Peter Samuelson

Chris F.A. Johnson

Jesse Silverman

Ed Morton

and of course Tom Christiansen

Updated:

- September 22, 2001

- November 26, 2002

- July 12, 2004

- February 27, 2006

- October 3, 2006

- January 17. 2007

- November 22, 2007

- March 1, 2008

- June 28, 2009

In the late 80’s, the C shell was the most popular interactive shell. The Bourne shell was too “bare-bones.” The Korn shell had to be purchased, and the Bourne Again shell wasn’t created yet.

I’ve used the C shell for years, and on the surface it has a lot of good points. It has arrays (the Bourne shell only has one). It has test(1), basename(1) and expr(1) built-in, while the Bourne shell needed external programs. UNIX was hard enough to learn, and spending months to learn two shells seemed silly when the C shell seemed adequate for the job. So many have decided that since they were using the C shell for their interactive session, why not use it for writing scripts?

THIS IS A BIG MISTAKE.

Oh - it’s okay for a 5-line script. The world isn’t going to end if you use it. However, many of the posters on USENET treat it as such. I’ve used the C shell for very large scripts and it worked fine in most cases. There are ugly parts, and work-arounds. But as your script grows in sophistication, you will need more work-arounds and eventually you will find yourself bashing your head against a wall trying to work around the problem.

I know of many people who have read Tom Christiansen’s essay about the C shell (http://www.faqs.org/faqs/unix-faq/shell/csh-whynot/), and they were not really convinced. A lot of Tom’s examples were really obscure, and frankly I’ve always felt Tom’s argument wasn’t as convincing as it could be. So I decided to write my own version of this essay - as a gentle argument to a current C shell programmer from a former C shell fan.

[Note - since I compare shells, it can be confusing. If the line starts with a “%” then I’m using the C shell. If in starts with a “$” then it is the Bourne shell.

Top Ten reasons not to use the C shell

- The Ad Hoc Parser

- Multiple-line quoting difficult

- Quoting can be confusing and inconsistent

- If/while/foreach/read cannot use redirection

- Getting input a line at a time

- Aliases are line oriented

- Limited file I/O redirection

- Poor management of signals and sub-processes

- Fewer ways to test for missing variables

- Inconsistent use of variables and commands.

1. The Ad Hoc Parser

The biggest problem of the C shell (and TCSH) it its ad hoc parser. Now this information won’t make you immediately switch shells. But it’s the biggest reason to do so. Many of the other items listed are based on this problem. Perhaps I should elaborate.

The parser is the code that converts the shell commands into variables, expressions, strings, etc. High-quality programs have a full-fledged parser that converts the input into tokens, verifies the tokens are in the right order, and then executes the tokens. The Bourne shell even as an option to parse a file, but don’t execute anything. So you can syntax check a file without executing it.

The C shell does not do this. It parses as it executes. You can have expressions in many types of instructions:

% if ( expression )

% set variable = ( expression )

% set variable = expression

% while ( expression )

% @ var = expression

There should be a single token for expression, and the evaluation of that token should be the same. They are not. You may find out that

% if ( 1 )

is fine, but

% if(1)

or

% if (1 )

or

% if ( 1)

Generates a syntax error. Or that the above works, if add a “!” or change “if” into “while”, or do both, you get a syntax error.

You never know when you will find a new bug. As I write this (September 2001) I ported a C shell script to another UNIX system. (It was my .login script, okay? Sheesh!) Anyhow I got an error “Variable name must begin with a letter” somewhere in the dozen files used when I log in. I finally traced the problem down to the following “syntax” error:

% if (! $?variable ) ...

Which variable must begin with a letter? Give up? Here’s how to fix the error:

% if ( ! $?variable ) ...

Yes - you must add a space before the “!” character to fix the “Variable name must begin with a letter” error. Sheesh!

The examples in the manual page don’t (or didn;t) mention that spaces are required. In other words, I provided a perfectly valid syntax according to the documentation, but the parser got confused and generated an error that wasn’t even close to the real problem. I call this type of error a “syntax” error. Except that instead of the fault being on the user - like normal syntax errors, the fault is in the shell, because the parser screwed up!

Sigh…

Here’s another one. I wanted to search for a string at the end of a line, using grep. That is:

% set var = "string"

% grep "$var$" < file

Most shells treat this as:

% grep "string$" <file

Great. Does the C shell do this? As John Belushi would say, “Noooooo!”

Instead, we get

Variable name must contain alphanumeric characters.

Ah. So we back quote (backslash) it.

% grep "$var\$" <file

This doesn’t work. The same thing happens. One work-around is

% grep "$var"'$' <file

Sigh…

Here’s another. For instance,

% if ( $?A ) set B = $A

If variable A is defined, then set B to $A. Sounds good. The problem? If A is not defined, you get “A: Undefined variable.” The parser is evaluating A even if that part of the code is never executed.

If you want to check a Bourne shell script for syntax errors, use “sh -n.” This doesn’t execute the script. but it does check all errors. What a wonderful idea. Does the C shell have this feature? Of course not. Errors aren’t found until they are EXECUTED. For instance, the code

% if ( $zero ) then

% while

% end

% endif

will execute with no complaints. However, if $zero becomes one, then

you get the syntax error:

while: Too few arguments.

Here’s another:

if ( $zero ) then

if the C shell has a real parser - complain

endif

In other words, you can have a script that works fine for months, and THEN reports a syntax error if the conditions are right. Your customers will love this “professionalism.”

And here’s another I just found today (October 2006). Create a script that has

#/bin/csh -f

if (0)

endif

And make sure there is no “newline” character after the endif. Execute this and you get the error

then: then/endif not found.

Tip: Make sure there is a newline character at the end of the last line.

And this one (August 2008)

% set a="b"

% set c ="d"

set: Variable name must begin with a letter.

So adding a space before the “=” makes “d” a variable? How does this make any sense?

Add a special character, and it becomes more unpredictable. This is fine

% set a='$'

But try this

% set a="$"

Illegal variable name.

Perhaps this might make sense, because variables are evaluated in double quotes. But try to escape the special character:

% set a="\$"

Variable name must contain alphanumeric characters.

However, guess what works:

% set a=$

as does

% set a=\$

It’s just too hard to predict what will and what will not work.

And we are just getting warmed up. The C shell a time bomb, gang…

Tick… Tick… Tick…

2. Multiple-line quoting difficult

The C shell complaints if strings are longer than a line. If you are typing at a terminal, and only type one quote, it’s nice to have an error instead of a strange prompt. However, for shell programming - it stinks like a bloated skunk.

Here is a simple ‘awk’ script that adds one to the first value of each line. I broke this simple script into three lines, because many awk scripts are several lines long. I could put it on one line, but that’s not the point. Cut me some slack, okay?

(Note - also - at the time I wrote this, I was using the old verison of AWK, that did not allow partial expressions to cross line boundries).

#!/bin/awk -f

{print $1 + \

2;

}

Calling this from a Bourne shell is simple:

#!/bin/sh

awk '

{print $1 + \

2;

}

'

They look the SAME! What a novel concept. Now look at the C shell version.

#!/bin/csh -f

awk '{print $1 + \\

2 ;\

}'

An extra backslash is needed. One line has two backslashes, and the second has one. Suppose you want to set the output to a variable. Sounds simple? Perhaps. Look how it changes:

#!/bin/csh -f

set a = `echo 7 | awk '{print $1 + \\\

2 ;\\

}'`

Now you need three backslashes! And the second line only has two. Keeping track of those backslashes can drive you crazy when you have large awk and sed scripts. And you can’t simply cut and paste scripts from different shells - if you use the C shell. Sometimes I start writing an AWK script, like

#!/bin/awk -f

BEGIN {A=123;}

etc...

And if I want to convert this to a shell script (because I want to specify the value of 123 as an argument), I simply replace the first line with an invocation to the shell:

#!/bin/sh

awk '

BEGIN {A=123;}

'

etc.

If I used the C shell, I’d have to add a \ before the end of each line.

Also note that if you WANT to include a newline in a string, strange things happen:

% set a = 'a \

b'

% echo $a

a b

The newline goes away. Suppose you really want a newline in the string. Will another backslash work?

% set a = 'a \\

b'

% echo $a

a \ b

That didn’t work. Suppose you decide to quote the variable:

% set a = 'a \

b'

% echo "$a"

Unmatched ".

Syntax error!? How bizarre. There is a solution - use the :q quote modifier.

% set a = 'a \

b'

% echo $a:q

a

b

This can get VERY complicated when you want to make aliases include backslash characters. More on this later. Heh. Heh.

One more thing - normally a shell allows you to put the quotes anywhere on a line:

echo abc"de"fg

is the same as

echo "abcdefg"

That’s because the quote toggles the INTERPRET/DON’T INTERPRET parser. However, you cannot put a quote right before the backslash if it follows a variable name whose value has a space. These next two lines generates a syntax error:`

% set a = "a b"

% set a = $a"\

c"

All I wanted to do was to append a “c” to the $a variable. It only works if the current value does NOT have a space. In other words

% set a = "a_b"

% set a = $a"\

c"

is fine. Changing “_” to a space causes a syntax error. Another

surprise. That’s the C shell - one never knows where the next surprise

will be.

3. Quoting can be confusing and inconsistent

The Bourne shell has three types of quotes:

"........" - only $, `, and \ are special.

'.......' - Nothing is special (this includes the backslash)

\. - The next character is not special (Exception: a newline)

That’s it. Very few exceptions. The C shell is another matter.

What works and what doesn’t is no longer simple and easy to understand.

As an example, look at the backslash quote. The Bourne shell uses the backslash to escape everything except the newline. In the C shell, it also escapes the backslash and the dollar sign. Suppose you want to enclose $HOME in double quotes. Try typing:

% echo "$HOME"

/home/barnett

Logic tells us to put a backslash in front. So we try

% echo "\$HOME"

\/home/barnett

Sigh.

So there is no way to escape a variable in a double quote. What about single quotes?

% echo '$HOME'

$HOME

works fine. But here’s another exception.

% echo MONEY$

MONEY$

% echo 'MONEY$'

MONEY$

% echo "MONEY$"

Illegal variable name.

The last one is illegal. So adding double quotes CAUSES a syntax error.

With single quotes, “!” character is special, as is the “~” character. Using single quotes (the strong quotes) the command

% echo '!1'

1: Event not found.

will give you the error

A backslash is needed because the single quotes won’t quote the exclamation mark. On some versions of the C shell,

echo hi!

works, but

echo 'hi!'

doesn’t. A backslash is required in front:

echo 'hi\!'

or if you wanted to put a ! before the word:

echo '\!hi'

Now suppose you type

% set a = "~"

% echo $a

/home/barnett

% echo '$a'

$a

% echo "$a"

~

The echo commands output THREE different values depending on the quotes. So no matter what type of quotes you use, there are exceptions. Those exceptions can drive you mad.

And then there’s dealing with spaces.

If you call a C shell script, and pass it an argument with a space:

% myscript "a b" c

Now guess what the following script will print.

#!/bin/csh -f

echo $#

set b = ( $* )

echo $#b

It prints “i2” and then “3”. A simple = does not copy a variable correctly if there are spaces involved. Double quotes don’t help. It’s time to use the fourth form of quoting - which is only useful when displaying (not set) the value:

% set b = ( $*:q )

Here’s another. Let’s saw you had nested backticks. Some shells use $(program1 $(program2)) to allow this. The C shell does not, so you have to use nested backticks. I would expect this to be

`program1 \`program2\` `

but what works is the illogical

`program1 ``program2``

Got it? It gets worse. Try to pass back-slashes to an alias You need billions and billions of them. Okay. I exaggerate. A little. But look at Dan Bernstein’s two aliases used to get quoting correct in aliases:

% alias quote "/bin/sed -e 's/\\!/\\\\\!/g' \\

-e 's/'\\\''/'\\\'\\\\\\\'\\\''/g' \\

-e 's/^/'\''/' \\

-e 's/"\$"/'\''/'"

% alias makealias "quote | /bin/sed 's/^/alias \!:1 /' \!:2*"

You use this to make sure you get quotes correctly specified in aliases.

Larry Wall calls this backslashitis. What a royal pain.

Tick.. Tick.. Tick..

4. If/while/foreach/read cannot use redirection

The Bourne shell allows complex commands to be combined with pipes. The C shell doesn’t. Suppose you want to choose an argument to grep.

Example:

% if ( $a ) then

% grep xxx

% else

% grep yyy

% endif

No problem as long as the text you are grepping is piped into the script. But what if you want to create a stream of data in the script? (i.e. using a pipe). Suppose you change the first line to be

% cat $file | if ($a ) then

Guess what? The file $file is COMPLETELY ignored. Instead, the script use standard input of the script, even though you used a pipe on that line. The only standard input the “if” command can use MUST be specified outside of the script. Therefore what can be done in one Bourne shell file has to be done in several C shell scripts - because a single script can’t be used. The ' while ' command is the same way. For instance the following command outputs the time with hyphens between the numbers instead of colons:

$ date | tr ':' ' ' | while read a b c d e f g

$ do

$ echo The time is $d-$e-$f

$ done

You can use < as well as pipes. In other words, ANY command in the Bourne shell can have the data-stream redirected. That’s because it has a REAL parser [rimshot].

Speaking of which… The Bourne shell allows you to combine several lines onto a single line as long as semicolons are placed between. This includes complex commands. For example - the following is perfectly fine with the Bourne shell:

$ if true;then grep a;else grep b; fi

This has several advantages. Commands in a makefile - see make(1) - have to be on one line. Trying to put a C shell “if” command in a makefile is painful. Also - if your shell allows you to recall and edit previous commands, then you can use complex commands and edit them. The C shell allows you to repeat only the first part of a complex command, like the single line with the “if” statement. It’s much nicer recalling and editing the entire complex command. But that’s for interactive shells, and outside the scope of this essay.

Suppose you want to read one line from a file. This simple task is very difficult for the C shell. The C shell provides one way to read a line:

% set ans = $<

The trouble is - this ALWAYS reads from standard input. If a terminal is attached to standard input, then it reads from the terminal. If a file is attached to the script, then it reads the file.

But what do you do if you want to specify the filename in the middle of the script? You can use “head -1” to get a line. but how do you read the next line? You can create a temporary file, and read and delete the first line. How ugly and extremely inefficient. On a scale of 1 to 10, it scores -1000.

Now what if you want to read a file, and ask the user something during this? As an example - suppose you want to read a list of filenames from a pipe, and ask the user what to do with some of them? Can’t do this with the C shell - $< reads from standard input. Always. The Bourne shell does allow this. Simply use

$ read ans </dev/tty

to read from a terminal, and

$ read ans

to read from a pipe (which can be created in the script). Also - what

if you want to have a script read from STDIN, create some data in the

middle of the script, and use $< to read from the new file. Can’t do

it. There is no way to do

set ans = $< <newfile

or

set ans = $< </dev/tty

or

echo ans | set ans = $<

$< is only STDIN, and cannot change for the duration of the script.

The workaround usually means creating several smaller scripts instead

of one script.

6. Aliases are line oriented

Aliases MUST be one line. However, the “if” WANTS to be on multiple lines, and quoting multiple lines is a pain. Clearly the work of a masochist. You can get around this if you bash your head enough, or else ask someone else with a soft spot for the C shell:

% alias X 'eval "if (\!* =~ 'Y') then \\

echo yes \\

else \\

echo no \\

endif"'

Notice that the “eval” command was needed. The Bourne shell function is more flexible than aliases, simpler and can easily fit on one line if you wish.

$ X() { if [ "$1" = "Y" ]; then echo yes; else echo no; fi;}

If you can write a Bourne shell script, you can write a function. Same syntax. There is no need to use special “\!:1” arguments, extra shell processes, special quoting, multiple backslashes, etc. I’m SOOOO tired of hitting my head against a wall.

Functions allow you to simplify scripts. Anything more sophisticated than an alias that would require function requires a separate csh script/file.

Tick..Tick..Tick..

7. Limited file I/O redirection

The C shell has one mechanism to specify standard output and standard error, and a second to combine them into one stream. It can be directed to a file or to a pipe.

That’s all you can do. Period. That’s it. End of story.

It’s true that for 90% to 99% of the scripts this is all you need to do. However, the Bourne shell can do much much more:

- You can close standard output, or standard error.

- You can redirect either or both to any file.

- You can merge output streams

- You can create new streams

As an example, it’s easy to send standard error to a file, and leave standard output alone. But the C shell can’t do this very well.

Tom Christiansen gives several examples in his essay. I suggest you read his examples. See http://www.faqs.org/faqs/unix-faq/shell/csh-whynot/

8. Poor management of signals and subprocesses

The C shell has very limited signal and process management.

Good software can be stopped gracefully. If an error occurs, or a signal is sent to it, the script should clean up all temporary files. The C shell has one signal trap:

% onintr label

To ignore all signals, use

% onintr -

The C shell can be used to catch all signals, or ignore all signals. All or none. That’s the choice. That’s not good enough.

Many programs have (or need) sophisticated signal handling. Sending a -HUP signal might cause the program to re-read configuration files. Sending a -USR1 signal may cause the program to turn debug mode on and off. And sending -TERM should cause the program to terminate. The Bourne shell can have this control. The C shell cannot.

Have you ever had a script launch several sub-processes and then try to stop them when you realized you make a mistake? You can kill the main script with a Control-C, but the background processes are still running. You have to use “ps” to find the other processes and kill them one at a time. That’s the best the C shell can do. The Bourne shell can do better. Much better.

A good programmer makes sure all of the child processes are killed when the parent is killed. Here is a fragment of a Bourne shell program that launches three child processes, and passes a -HUP signal to all of them so they can restart.

$ PIDS=

$ program1 & PIDS="$PIDS $!"

$ program2 & PIDS="$PIDS $!"

$ program3 & PIDS="$PIDS $!"

$ trap "kill -1 $PIDS" 1

If the program wanted to exit on signal 15, and echo its process ID, a second signal handler can be added by adding:

$ trap "echo PID $$ terminated;kill -TERM $PIDS;exit" 15

You can also wait for those processes to terminate using the wait

command:

$ wait "$PIDS"

Notice you have precise control over which children you are waiting for. The C shell waits for all child processes. Again - all or none - those are your choices. But that’s not good enough. Here is an example that executes three processes. If they don’t finish in 30 seconds, they are terminated - an easy job for the Bourne shell:

$ MYID=$$

$ PIDS=

$ (sleep 30; kill -1 $MYID) &

$ (sleep 5;echo A) & PIDS="$PIDS $!"

$ (sleep 10;echo B) & PIDS="$PIDS $!"

$ (sleep 50;echo C) & PIDS="$PIDS $!"

$ trap "echo TIMEOUT;kill $PIDS" 1

$ echo waiting for $PIDS

$ wait $PIDS

$ echo everything OK

There are several variations of this. You can have child processes start up in parallel, and wait for a signal for synchronization.

There is also a special “0” signal. This is the end-of-file condition. So the Bourne shell can easily delete temporary files when done:

trap "/bin/rm $tempfiles" 0

The C shell lacks this. There is no way to get the process ID of a child process and use it in a script. The wait command waits for ALL processes, not the ones your specify. It just can’t handle the job.

9. Fewer ways to test for missing variables

The C shell provides a way to test if a variable exists - using the $?var name:

% if ( $?A ) then

% echo variable A exists

% endif

However, there is no simple way to determine if the variable has a value. The C shell test

% if ($?A && ("$A" =~ ?*)) then

Returns the error:

A: undefined variable.

You can use nested “if” statements using:

% if ( $?A ) then

% if ( "$A" =~ ?* ) then

% # okay

% else

% echo "A exists but does not have a value"

% endif

% else

% echo "A does not exist"

% endif

The Bourne shell is much easier to use. You don’t need complex “if” commands. Test the variable while you use it:

$ echo ${A?'A does not have a value'}

If the variable exists with no value, no error occurs. If you want to add a test for the “no-value” condition, add the colon:

$ echo ${A:?'A is not set or does not have a value'}

Besides reporting errors, you can have default values:

$ B=${A-default}

You can also assign values if they are not defined:

$ echo ${A=default}

These also support the “:” to test for null values.

10. Inconsistent use of variables and commands.